ICMI-MLMI 2009 at MIT

I’m visiting Boston, USA, right now to attend the Eleventh International Conference on Multimodal Interfaces and the Sixth Workshop on Machine Learning for Multimodal Interaction (ICMI-MLMI 2009). This is the most important conference in the field of multimodal interaction, which is one of my PhD’s case studies.

Yesterday I gave my presentation, entitled “A Fusion Framework for Multimodal Interactive Applications”, which covers one of the applications of my PhD research. I have never taken the time on this blog to explain what multimodal interaction and multimodal fusion are, so let me do it briefly now. Multimodal interaction is the ability to interact with a system through more than one modality (vision, hearing, touch, etc.), where each modality has a different or complementary influence on the interaction. For example, a text-processing program might accept both keyboard input and speech recognition as ways to enter text. Multimodal fusion is the ability to use information from two or more modalities, either to improve a less precise modality or to derive more complex meanings by combining data from those modalities. For example, to get better speech recognition results, a vision system could perform lip reading, increasing the confidence that a certain word was said in cases of uncertainty.
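To make the lip-reading example a bit more concrete, here is a minimal sketch of one common way to combine two recognisers: a weighted, log-linear "late fusion" of their word probabilities. This is only an illustration and not the framework from my presentation. The function name `fuse`, the weight `speech_weight`, and all the probabilities are assumptions made up for the example.

```python
import math


def fuse(speech, lips, speech_weight=0.7):
    """Combine two word-probability tables with a weighted log-linear rule.

    `speech` and `lips` map candidate words to probabilities. The weight
    and the small probability floor are illustrative choices, not tuned values.
    """
    lip_weight = 1.0 - speech_weight
    floor = 1e-6  # avoids log(0) when one modality never proposed a word
    words = set(speech) | set(lips)

    # Weighted sum of log-probabilities for every candidate word.
    scores = {
        w: speech_weight * math.log(max(speech.get(w, 0.0), floor))
        + lip_weight * math.log(max(lips.get(w, 0.0), floor))
        for w in words
    }

    # Turn the scores back into probabilities that sum to 1.
    top = max(scores.values())
    unnormalised = {w: math.exp(s - top) for w, s in scores.items()}
    total = sum(unnormalised.values())
    return {w: v / total for w, v in unnormalised.items()}


# Hypothetical outputs: the audio is noisy and slightly prefers "night",
# while the lip reader clearly saw closed lips, which favours "might".
speech = {"night": 0.52, "might": 0.48}
lips = {"night": 0.20, "might": 0.80}

fused = fuse(speech, lips)
best = max(fused, key=fused.get)
print(best, fused)
```

With these made-up numbers, the speech recogniser alone would pick "night", but the fused result prefers "might", because the visual evidence settles the case where the audio is uncertain.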

Well, my presentation went well. The audience’s questions were tough, but they were satisfied with my answers, and some of them even emailed me privately asking for the presentation. I would say this was the toughest audience I’ve ever had. A conference at MIT normally attracts many big names in the field of multimodal interaction. The environment is really appropriate (and magical) for discussing research and innovation. This year the conference put a big emphasis on robotics, with exciting demos and impressive results on interaction and learning with humans. We also had the chance to tour the lab, visiting different projects and watching live demonstrations (some pictures below).

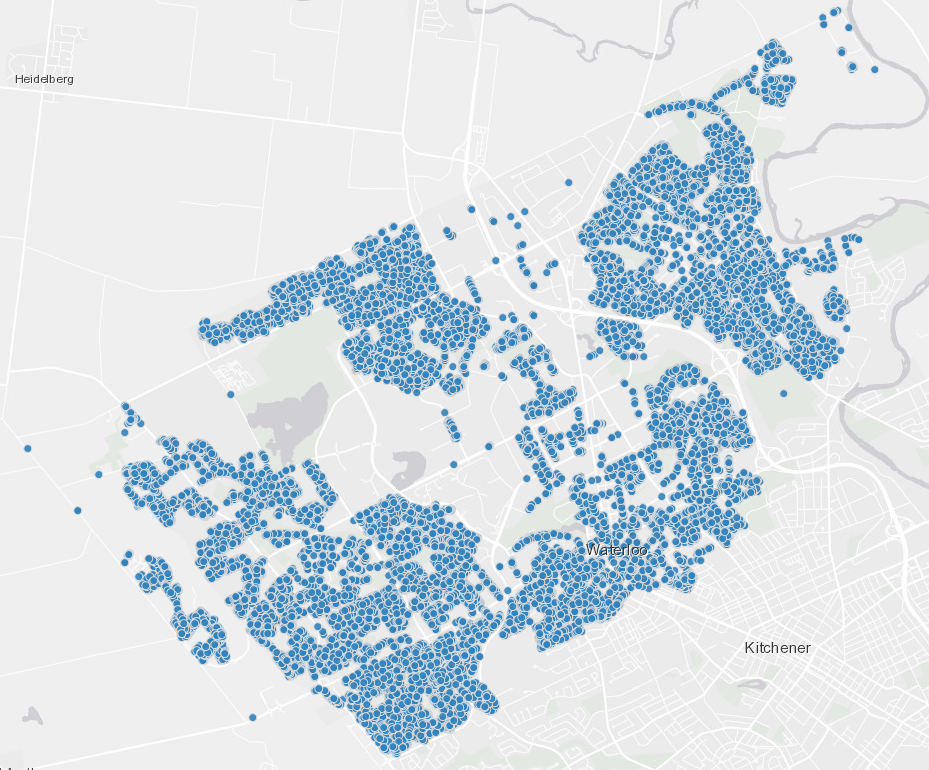

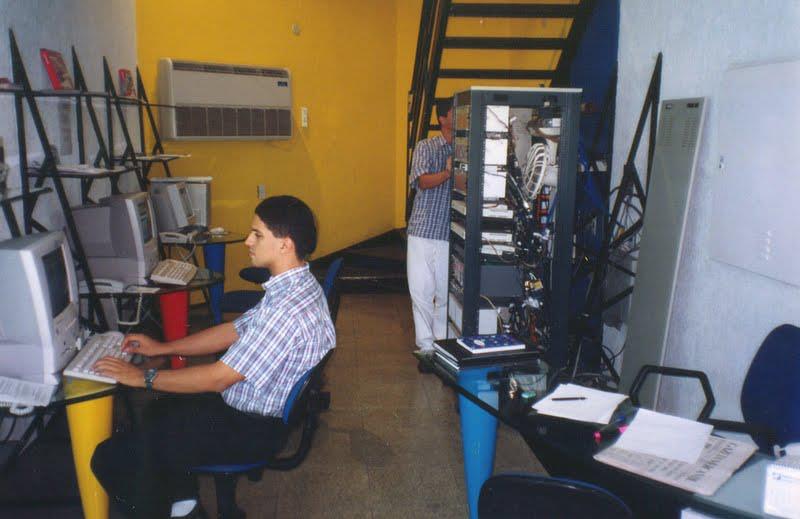

One of the dozens of rooms in the MIT Media Lab

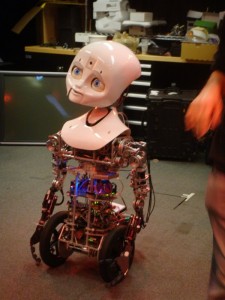

Social and emotional interaction with a child robot

The experience of visiting the MIT Media Lab was unique. It is a space where people are totally free to create, even if their ideas sound totally ridiculous. In the end, they find a way to exploit those ideas. It shows that, sometimes, real problems can block people’s creativity, and that great ideas can also come from nowhere.
